A depthcam? A webkinect? Introducing a new kind of webcam

Update, 10 February: Sorry for some serious reliability issues over the last few days. The streaming server is now hosted in-house at CASA, which should be a lot more robust.

At CASA we’ve been looking at using a Kinect or three in our forthcoming ANALOGIES (Analogues of Cities) conference + exhibition.

We’ve been inspired in part by Ruairi Glynn’s amazing work here at UCL, and Martin has been happily experimenting with the OpenKinect bindings for Processing.

Meanwhile, I recently got to grips with the excellent Three.js, which makes WebGL — aka 3D graphics in modern browsers — as easy as falling off a log. I’m also a big fan of making things accessible over the web. And so I began to investigate prospects for working with Kinect data in HTML5.

There’s DepthJS, an extension for Chrome and Safari, but this requires a locally-connected Kinect and isn’t very clear on Windows support. There’s also Intrael, which serves the depth data as JPEG files and provides some simple scene recognition output as JSON. But it’s closed-source and not terribly flexible.

The depthcam

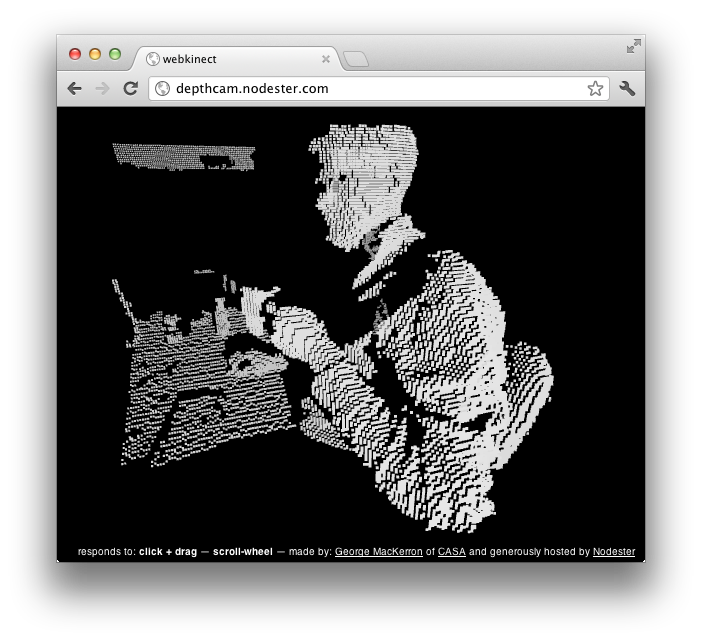

So I decided to roll my own. I give you: the depthcam!

Click here or on the screenshot to connect.

It’s a live-streaming 3D point-cloud, carried over a binary WebSocket. It responds to movement in the scene by panning the (virtual) camera, and you can also pan and zoom around with the mouse.

Currently you’ll need Google Chrome to try it, and the number of people who can tune in at once is limited for reasons of bandwidth. If you can’t connect, or nothing much is happening, try this short video on YouTube instead.

It might be the future of video-conferencing. It could also be the start of a new wave of web-based movement-powered games.

How it works

The code is on GitHub, and is in three parts:

- A short Python script wiring up the OpenKinect Python wrapper to the Autobahn WebSockets library. Depending on the arguments it’s run with, this can either serve a web browser directly, or it can push the depth data up to…

- A simple node.js server that gets us round the UCL firewall. This accepts depth data pushed from the Python script, and broadcasts it onwards (still using binary WebSockets) to any connected web browsers, which are running…

- The web-based client, written in CoffeeScript. This connects to the node.js server, receives the depth data, and visualises it as a particle system using Three.js and WebGL.

The incoming data from the Kinect is pretty heavy, at about 18 MB/s (640 × 480 pixels × 2 bytes per pixel × 30 Hz). This is more than we can expect to (or afford to!) push over the Internet. So the Python script does some basic video compression to cut this down by a factor of a few hundred, to 30 – 100 KB/s. It follows this three-step recipe:

- Reduce the amount of data by down-sampling and quantizing to 160 × 120 × 1 byte per pixel.

- Increase the data’s compressibility. First, reduce noise — which also looks bad — by making each transmitted depth value (i.e. pixel) the median of the values received in the last three frames. Then express each value as its difference from the previous value, with just an occasional absolute-value keyframe to allow new viewers to pick up the stream.

- Compress the data using LZMA, which gets better compression ratios than GZIP or BZIP2, and decompression times somewhere between the two.
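To make the recipe above concrete, here is a minimal sketch of it using only the Python standard library. It is not the code from the GitHub repo. The names (`Encoder`, `Decoder`, `downsample_quantize`), the one-byte keyframe flag, the keyframe interval, the 11-bit input range and the choice to take differences against the same pixel in the previous transmitted frame are all assumptions made for illustration.

```python
import lzma

SRC_W, SRC_H = 640, 480
STEP = 4            # keep every 4th pixel: 640/4 = 160, 480/4 = 120
MAX_DEPTH = 2047    # assumption: raw depth values are 11-bit (0..2047)


def downsample_quantize(frame):
    """Flat list of SRC_W*SRC_H depth ints -> 160*120 bytes (one per pixel)."""
    out = bytearray()
    for y in range(0, SRC_H, STEP):
        row = y * SRC_W
        for x in range(0, SRC_W, STEP):
            out.append(frame[row + x] * 255 // MAX_DEPTH)
    return bytes(out)


def median3(a, b, c):
    """Per-pixel median of three equally sized frames."""
    return bytes(sorted(t)[1] for t in zip(a, b, c))


def delta(cur, prev):
    """Per-pixel difference from the previous frame, wrapped to one byte."""
    return bytes((c - p) & 0xFF for c, p in zip(cur, prev))


def undelta(diff, prev):
    """Inverse of delta(): rebuild the current frame from the previous one."""
    return bytes((p + d) & 0xFF for d, p in zip(diff, prev))


class Encoder:
    def __init__(self, keyframe_every=30):   # hypothetical interval
        self.history = []    # up to three most recent quantized frames
        self.prev = None     # last frame as transmitted (after the median)
        self.count = 0
        self.keyframe_every = keyframe_every

    def encode(self, raw_frame):
        q = downsample_quantize(raw_frame)
        self.history = (self.history + [q])[-3:]
        # Until three frames have arrived, pad with the newest one.
        padded = self.history + [q] * (3 - len(self.history))
        smooth = median3(*padded)
        is_key = self.prev is None or self.count % self.keyframe_every == 0
        payload = smooth if is_key else delta(smooth, self.prev)
        self.prev = smooth
        self.count += 1
        # Hypothetical framing: 1 flag byte (1 = keyframe), then LZMA data.
        return bytes([1 if is_key else 0]) + lzma.compress(payload)


class Decoder:
    def __init__(self):
        self.prev = None

    def decode(self, packet):
        payload = lzma.decompress(packet[1:])
        if packet[0] == 1:
            self.prev = payload              # absolute values
        elif self.prev is None:
            return None                      # joined mid-stream: wait for a keyframe
        else:
            self.prev = undelta(payload, self.prev)
        return self.prev
```

Down-sampling and quantizing alone take the stream from about 18.4 MB/s to 576 KB/s (160 × 120 × 1 byte × 30 Hz). The rest of the saving comes from the delta step, which turns a mostly static scene into long runs of zeros that LZMA compresses very well. A viewer that connects between keyframes gets `None` from `Decoder.decode` until the next keyframe arrives, which is why the occasional absolute-value frame is needed.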

Feel free to fork the code and do something great with it.
